Storage is one of the most critical resources in a vSphere environment. Several parameters should be taken into consideration when designing storage, such as block size, disk types, required I/O, and bandwidth. These parameters usually drive the design, but one important factor is often overlooked: Queue Depth (QD).

In this post we discuss storage queue depth and cover the following topics:

- Basic definitions: fan-out/fan-in ratios, port queue depth.

- Why is queue depth important?

- How many hosts can I connect to an array?

- How do I determine what the queue depth should be on an initiator, and how do I tune it?

Some basic storage definitions

Fan-out ratio (Storage Port to Host Port) shows the number of hosts that are connected/attached to a single port of a storage array.

Fan-in ratio (Host Port to Target Port) shows the number of storage ports that can be served from a single host channel.

Port queue depth is the number of requests that can be placed in the port queue to be serviced once the current one completes.

Importance of Queue Depth (QD)

Queue depth is the number of commands that the HBA can send or receive in a single chunk, per LUN. When you exceed the queue depth on the target, you should expect performance degradation. When too many concurrent I/Os are sent to a storage device, the device responds with a queue full (qfull) I/O failure message, which is intended to make the host retry the I/O a short time later. It is possible to control LUN queue depth throttling in VMware ESXi. For more information, please follow the KB here.
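The queue full behaviour described above can be illustrated with a toy model. This is only a sketch, not how an array or ESXi is implemented: the `TargetPort` class, its methods, and the queue depth of 4 are hypothetical names and values chosen for illustration.

```python
class TargetPort:
    """Toy model of a storage port with a fixed-size command queue."""

    def __init__(self, queue_depth: int) -> None:
        self.queue_depth = queue_depth  # hypothetical port queue depth
        self.outstanding = 0            # commands currently queued

    def submit(self) -> str:
        # Reject the command when every queue slot is already in use.
        if self.outstanding >= self.queue_depth:
            return "QFULL"
        self.outstanding += 1
        return "ACCEPTED"

    def complete(self) -> None:
        # One outstanding command finishes and frees a queue slot.
        if self.outstanding > 0:
            self.outstanding -= 1


port = TargetPort(queue_depth=4)
results = [port.submit() for _ in range(6)]
print(results)        # four commands accepted, then two rejected with QFULL
port.complete()       # a slot frees up a short time later
print(port.submit())  # the retried command is accepted
```

The point of the model is that a rejected command is not lost: the host has to retry it later, which is where the extra latency comes from.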

How many vSphere hosts can be connected to array?

In general, the number of servers an array can adequately support depends on the following:

- Queue depth per physical storage port.

- Number of Storage Ports.

- Bandwidth (throughput) per Controller.

(Queue Depth per Physical Storage Port x Storage Ports) / Host Queue Depth = Number of servers

For example, an 8-port array (4 ports per controller) with 1024 queues per storage port and a host (LUN) queue depth setting of 64 can connect up to:

(8 x 1024) / 64 = 128 single-connected servers, or 64 servers with 2 HBAs each, or 32 servers with 4 HBAs each

The fan-out ratio is 16:1, meaning 16 initiators per storage port (128 initiators / 8 ports).
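The arithmetic above can be sketched in a few lines of Python. This is a minimal illustration rather than a sizing tool: the function name `max_connected_hosts` and its parameter names are hypothetical, and it assumes that every HBA in a host consumes one full host queue depth worth of port queue slots.

```python
def max_connected_hosts(port_queue_depth: int, storage_ports: int,
                        host_queue_depth: int, hbas_per_host: int = 1) -> int:
    """(port queue depth x storage ports) / host queue depth, split across HBAs."""
    if min(port_queue_depth, storage_ports, host_queue_depth, hbas_per_host) < 1:
        raise ValueError("all inputs must be positive integers")
    total_queue_slots = port_queue_depth * storage_ports
    # Assumption: each HBA of a host uses host_queue_depth slots.
    return total_queue_slots // (host_queue_depth * hbas_per_host)


# Values from the example: 8 ports, 1024 queues per port, host queue depth 64.
for hbas in (1, 2, 4):
    print(hbas, "HBA(s) per host:", max_connected_hosts(1024, 8, 64, hbas))

# Fan-out ratio: initiators per storage port for single-connected servers.
print("fan-out:", max_connected_hosts(1024, 8, 64) // 8)
```

Integer division is used on purpose: a fraction of a host cannot be connected, so the result is rounded down.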

What about a VMware infrastructure with multiple ESXi hosts communicating with the storage ports? The queue depth should be calculated with the following formula:

Port-QD ≥ ESXi Host 1 (P * QD * L) + ESXi Host 2 (P * QD * L) + ... + ESXi Host n (P * QD * L)

where:

Port-QD = Target Port Queue Depth

P = Number of host paths connected to the array target port

L = Number of LUNs presented to the host via the array target port

QD = LUN queue depth on the host

As shown above, the total aggregate of the maximum number of outstanding SCSI commands from all ESXi hosts connected to an array port should not exceed the maximum queue depth of that port.
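The check described by this formula can be sketched as follows. The `HostLoad` class and the `port_is_oversubscribed` function are hypothetical names, and the host counts, path counts and LUN counts in the example are made-up values, not measurements from a real environment.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class HostLoad:
    paths: int            # P: host paths connected to the array target port
    lun_queue_depth: int  # QD: LUN queue depth on the host
    luns: int             # L: LUNs presented to the host via the target port

    def max_outstanding(self) -> int:
        # Worst case for one host: P * QD * L outstanding commands.
        return self.paths * self.lun_queue_depth * self.luns


def port_is_oversubscribed(port_queue_depth: int,
                           hosts: Iterable[HostLoad]) -> bool:
    """True when the summed worst case of all hosts exceeds Port-QD."""
    total = sum(host.max_outstanding() for host in hosts)
    return total > port_queue_depth


# Hypothetical cluster: each host has 1 path, LUN queue depth 64, 4 LUNs.
four_hosts = [HostLoad(paths=1, lun_queue_depth=64, luns=4) for _ in range(4)]
print(port_is_oversubscribed(1024, four_hosts))   # 4 * 256 = 1024, fits

five_hosts = four_hosts + [HostLoad(paths=1, lun_queue_depth=64, luns=4)]
print(port_is_oversubscribed(1024, five_hosts))   # 5 * 256 = 1280, too much
```

This is a worst-case check: it assumes every host fills every LUN queue at the same time, which is rare in practice but is what the formula guards against.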

Queue Depth and VSAN

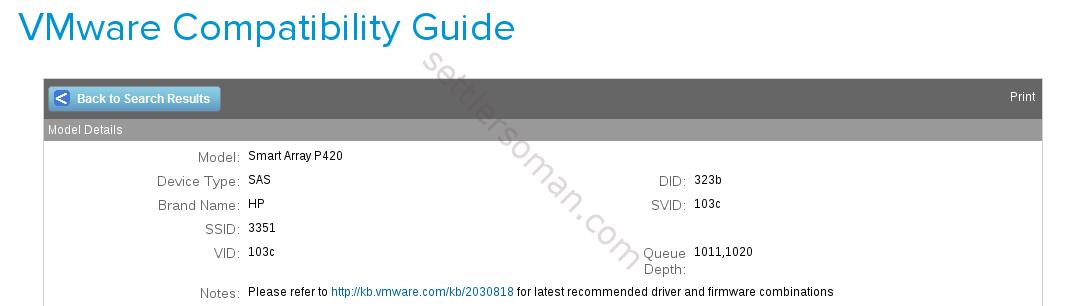

Of course, Queue Depth is crucial when you implement VSAN. You should use IO Controller being on the VMware HCL. For example, the HP Smart Array P420 Queue Depth can be 1011 or 1020:

Queue Depth tuning on vSphere

Sometimes it may be necessary to adjust the maximum queue depth values of ESXi/ESX hosts. For example, as mentioned in the VMware KB here, large-scale workloads with intensive I/O patterns might require queue depths significantly greater than the Paravirtual SCSI default values. In that case, two things should be changed:

- The default Queue Depth values for HBA (QLogic, Emulex or Brocade).

- The Maximum Outstanding Disk Requests (Disk.SchedNumReqOutstanding parameter).

For example, if you use a QLogic HBA (the default queue depth is 64 in ESXi 5.x-6.0) on ESXi 5.5 hosts, follow these steps:

- Verify which HBA module is currently loaded by entering the following command in the ESXi shell (via SSH):

esxcli system module list | grep qln

- Set the queue depth by running the following command:

esxcli system module parameters set -p qlfxmaxqdepth=128 -m qlnativefc

- Reboot your host.

- Run this command to confirm that your changes have been applied:

esxcli system module parameters list -m qlnativefc

- Check the Maximum Outstanding Disk Requests value:

esxcli storage core device list -d naa.xxx

where naa.xxx is your LUN ID.

- To modify the current value for a device, run the command:

esxcli storage core device set -d naa.xxx -O value

where value is between 1 and 256.
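If you script this last step, it can help to validate the value before it reaches the host. The helper below is a sketch under that assumption: the function name `sched_num_req_outstanding_cmd` is hypothetical, the value 64 is only an example, and the code merely builds the command shown above without running anything.

```python
from typing import List


def sched_num_req_outstanding_cmd(device_id: str, value: int) -> List[str]:
    """Build the argument list for the per-device command shown above."""
    # The valid range (1 to 256) is taken from the step above.
    if not 1 <= value <= 256:
        raise ValueError("value must be between 1 and 256")
    return ["esxcli", "storage", "core", "device", "set",
            "-d", device_id, "-O", str(value)]


# naa.xxx is the placeholder LUN ID used in this post; 64 is an example value.
print(" ".join(sched_num_req_outstanding_cmd("naa.xxx", 64)))
```

Returning a list of arguments rather than a single string keeps the device ID as one argument if the list is later passed to a process launcher.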

Changing the options above should be a careful and well-considered decision. For more information, please follow the VMware KB here.

Conclusion

You should carefully consider the number of hosts connected to an array and the load they generate. The most important thing is to understand and analyze how I/O requests are configured between initiators and targets. If you can dedicate ports to high-performance applications, make sure the queue depth is configured appropriately. Also keep the HBA vendor (or at least the queue depth value) uniform across all hosts in a cluster.