While preparing a Nexus 1000v upgrade for my customer, I remembered that I have some posts waiting in the "queue" to be completed and published 🙂 One of them covers the design of the L3 control interface used for VSM-VEM traffic.

Cisco Nexus 1000v is very popular and still supported in VMware environments (including vSphere 6.0, as you can read here).

The Nexus 1000V VSM VM has three network interfaces.

- Control VLAN (control0) - is used for communication with the VEMs and the partner (standby) VSM. This is the first interface on the VSM (labeled “Network Adapter 1” in the virtual machine network properties).

- Management VLAN (mgmt0) - is used by the VSM management interface (mgmt0) to communicate with vCenter (for example to publish port configuration), for remote access etc. This is the second interface on the VSM (labeled “Network Adapter 2” in the virtual machine network properties).

- Packet VLAN - is used by the VEM to forward any control packet (e.g. CDP) received from the upstream network to the VSM for further processing. This is the third interface on the VSM (labeled “Network Adapter 3” in the virtual machine network properties).

The VEM-to-VSM network communication can be based on:

- Layer 2 mode for control and packet traffic; the VSM and the VEMs must then be in the same Layer 2 domain (same VLAN).

or

- Layer 3 mode.

As you can read here, Cisco recommends Layer 3 mode (L3 control uses UDP port 4785).

So what are the most important advantages of using L3? Here are some:

- Much easier troubleshooting (for example, you can use ping and other tools that only work at L3).

- The packet VLAN is neither needed nor used.

In my opinion the only disadvantage is the additional IP address and VLAN requirements, which depend on the VSM-VEM communication option chosen (described later in this post).

Depending on the number of VMkernel interfaces consumed, there are three L3 control design scenarios:

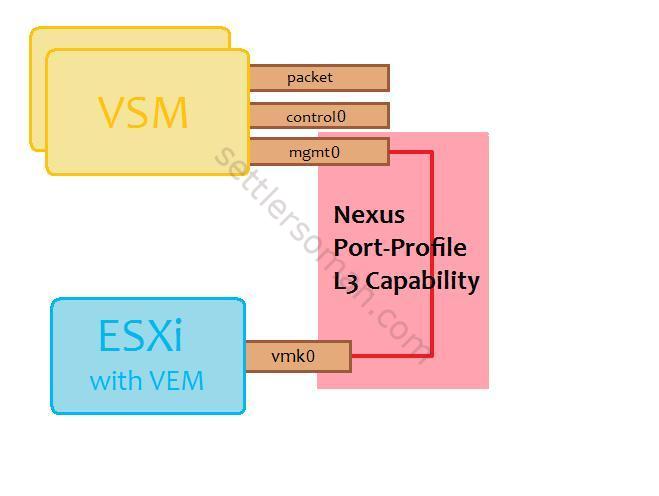

- VSM (mgmt0) to VEM (vmk0)

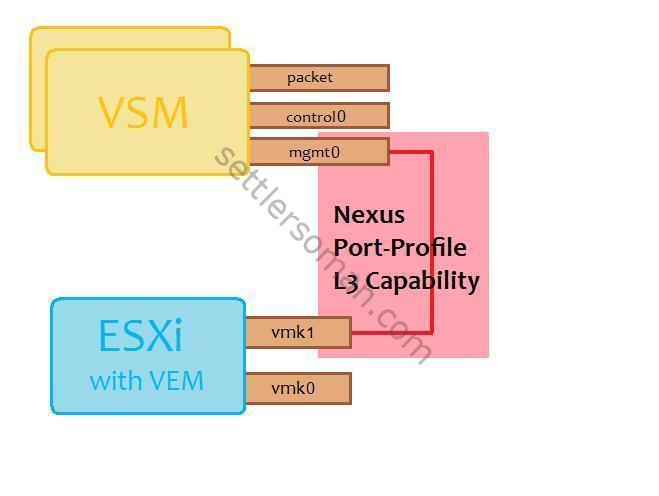

- VSM (mgmt0) to VEM (vmk1)

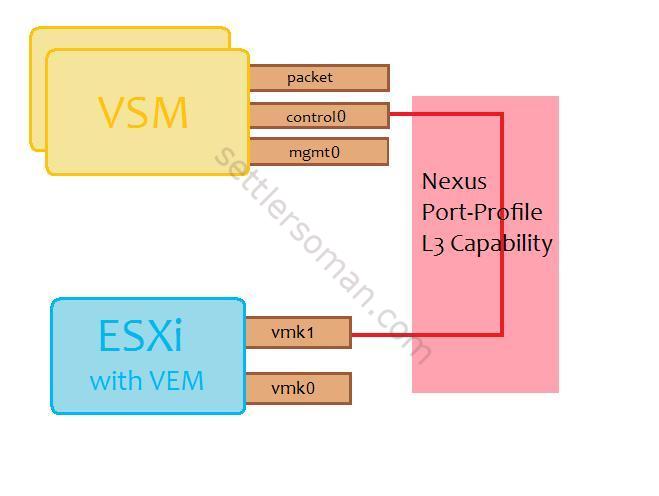

- VSM (control0) to VEM (vmk1)

where vmk0 is the ESXi management interface and vmk1 is a dedicated VMkernel interface for control.

In scenario 1, VSM-to-VEM traffic runs over the management interfaces of the VSM and the ESXi hosts. This is the easiest deployment, but it requires migrating vmk0 to a Nexus 1000v port profile with L3 capability.
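To make the three combinations easier to compare, here is a minimal Python sketch that models them as data. The class and function names (`L3ControlScenario`, `extra_vmk_ips`) are made up for this illustration and are not part of any Cisco or VMware tooling. The IP count is a simplification that only counts one extra address per host for a dedicated vmk1.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class L3ControlScenario:
    """One VSM-to-VEM L3 control pairing (hypothetical model for this post)."""
    number: int
    vsm_interface: str  # "mgmt0" or "control0"
    vem_interface: str  # "vmk0" (ESXi management) or "vmk1" (dedicated)

    @property
    def needs_dedicated_vmk(self) -> bool:
        # A dedicated VMkernel interface is needed whenever vmk0 is not used.
        return self.vem_interface != "vmk0"

    @property
    def requires_vmk0_migration(self) -> bool:
        # Using vmk0 for control means moving it to a Nexus 1000v port profile.
        return self.vem_interface == "vmk0"


SCENARIOS = (
    L3ControlScenario(1, "mgmt0", "vmk0"),
    L3ControlScenario(2, "mgmt0", "vmk1"),
    L3ControlScenario(3, "control0", "vmk1"),
)


def extra_vmk_ips(scenario: L3ControlScenario, esxi_hosts: int) -> int:
    """Extra VMkernel IP addresses needed across all hosts (assumed: one per vmk1)."""
    return esxi_hosts if scenario.needs_dedicated_vmk else 0


if __name__ == "__main__":
    for s in SCENARIOS:
        print(
            f"scenario {s.number}: VSM {s.vsm_interface} <-> VEM {s.vem_interface}, "
            f"migrate vmk0: {s.requires_vmk0_migration}, "
            f"extra vmk IPs for 8 hosts: {extra_vmk_ips(s, 8)}"
        )
```

The point of the model is only to show the trade-off: scenario 1 costs no extra VMkernel addresses but touches vmk0, while scenarios 2 and 3 leave vmk0 alone at the price of one more interface per host.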

Pros:

- Fewer vmkernel interfaces = fewer physical uplinks and IP addresses are consumed on the ESXi host.

Cons:

- The vmk0 interface needs to be migrated to the VSM port profile.

In scenario 2, VSM-to-VEM traffic runs over the management interface of the VSM and a dedicated vmkernel interface on the ESXi host.

Pros:

- Dedicated vmkernel interface (vmk1) on the ESXi host. vmk0 does not need to be migrated, so a hybrid implementation is possible (ESXi management on vSwitch0, VMs on Nexus 1000v).

Cons:

- More vmkernel interfaces = more physical uplinks and IP addresses are consumed on the ESXi host.

In scenario 3, VSM-to-VEM traffic runs over a dedicated interface of the VSM (control0) and a dedicated vmkernel interface (vmk1) on the ESXi host.

Pros:

- Dedicated vmkernel interface (vmk1) on the ESXi host. vmk0 does not need to be migrated, so a hybrid implementation is possible (ESXi management on vSwitch0, VMs on Nexus 1000v).

Cons:

- More vmkernel interfaces = more physical uplinks and IP addresses are consumed on the ESXi host.

OK, so we have discussed some Nexus 1000v L3 design possibilities. Which one should we use? Of course, the answer is: it depends 🙂

I use scenario 2 because I'm old school and I like leaving the ESXi mgmt interface on vSwitch0 🙂 but the easiest option (also recommended by Cisco), and the one that consumes the fewest interfaces, is scenario 1.
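The "it depends" can be written down as a tiny decision helper. This is only my reading of the trade-offs in this post, not official Cisco guidance. The function name and both parameters are hypothetical.

```python
def pick_scenario(keep_esxi_mgmt_on_vswitch0: bool,
                  separate_control_from_vsm_mgmt: bool) -> int:
    """Return the scenario number (1, 2 or 3) matching two simple preferences.

    Assumption: the only inputs that matter are whether vmk0 must stay on
    vSwitch0 and whether VSM control traffic should use control0 instead of mgmt0.
    """
    if separate_control_from_vsm_mgmt:
        # Only scenario 3 uses control0 on the VSM; it also leaves vmk0 untouched.
        return 3
    if keep_esxi_mgmt_on_vswitch0:
        # mgmt0 on the VSM, dedicated vmk1 on the hosts (hybrid setup possible).
        return 2
    # Fewest interfaces: mgmt0 on the VSM, vmk0 migrated to the Nexus 1000v.
    return 1


if __name__ == "__main__":
    # My own preference from this post: leave ESXi management on vSwitch0.
    print(pick_scenario(keep_esxi_mgmt_on_vswitch0=True,
                        separate_control_from_vsm_mgmt=False))
```

With both preferences set to `False` the helper falls through to scenario 1, which matches the "easiest, fewest interfaces" choice described above.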

Update 18.09.2015: There is a bug so you should follow my post here.